I. Buckle Up: Your Brain-Friendly Guide to Autonomous Cars

Ever wondered what your future self-driving taxi is actually thinking? Or how it avoids that rogue squirrel better than you can? It’s a question that sits at the intersection of science fiction and daily reality, and the answers are far more intricate than you might imagine.

Autonomous cars aren’t just fancy gadgets; they’re a symphony of Artificial Intelligence (AI) and a whole orchestra of sensors working in harmony, or at least striving for it. Each sensor acts as a specialized instrument, feeding data to the AI conductor that orchestrates the vehicle’s movements.

The ultimate goal? To kick human error (the villain in most road accidents) out of the driver’s seat for good. The promise is tantalizing: safer roads, fewer accidents, and perhaps even the liberation of our time spent commuting. Imagine a world where traffic jams become moments of productivity or relaxation, not sources of stress.

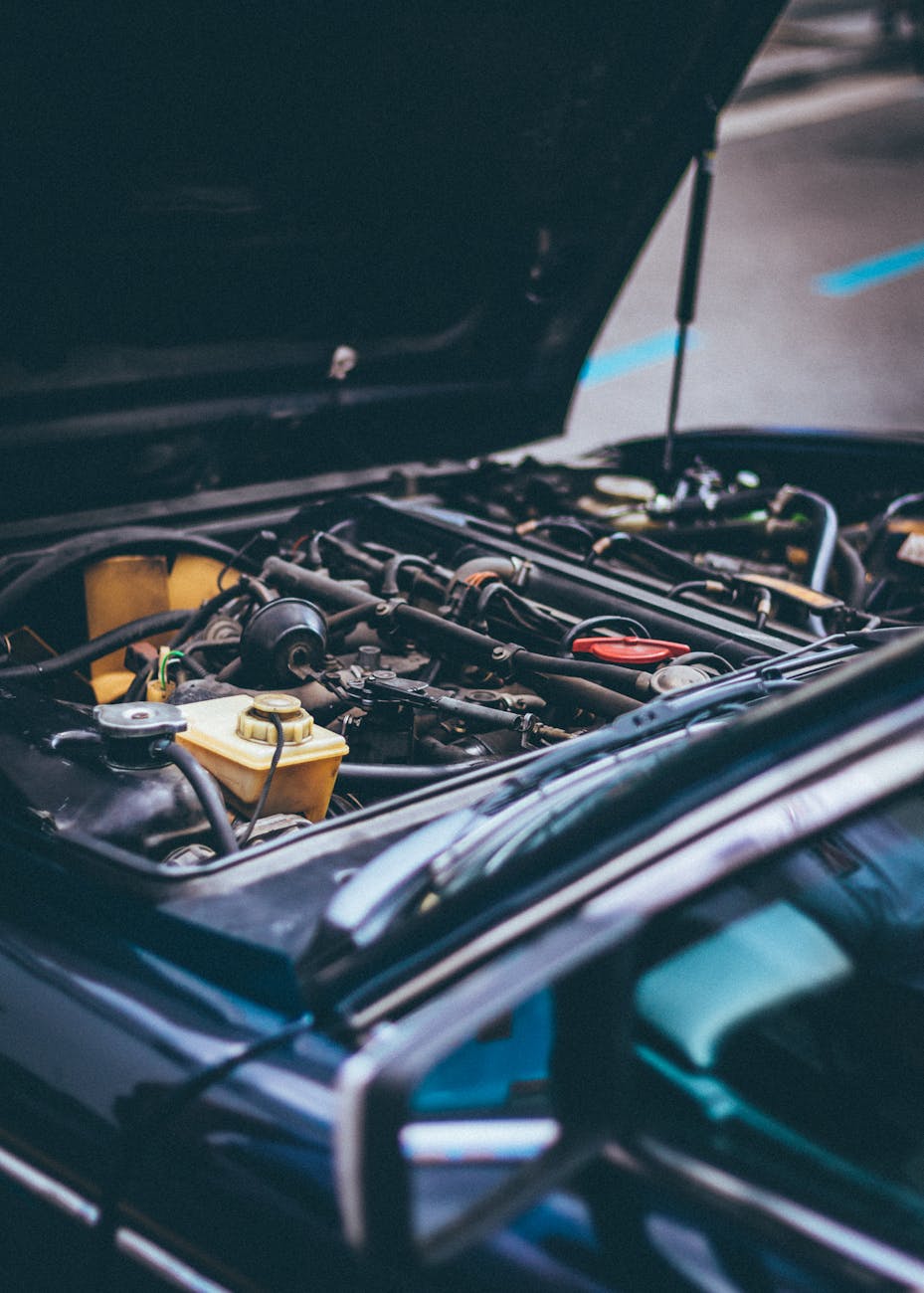

Let’s take a peek under the hood. We’ll explore the ‘eyes and ears’ (sensors) and the ‘brain’ (AI) that make these futuristic vehicles tick – and sometimes, glitch. It’s a journey into the heart of a technological revolution that promises to reshape our world, but not without raising some profound questions along the way.

II. From Da Vinci to Deep Learning: A Self-Driving Saga

The quest for autonomous vehicles isn’t a recent fad; it’s a story that stretches back centuries, woven with the threads of visionary thinking and relentless innovation.

Believe it or not, the idea of a self-propelled cart goes all the way back to Leonardo da Vinci. His sketches hint at a fascination with automated movement, a seed of an idea that would lie dormant for centuries. In 1898, Nikola Tesla demonstrated a radio-controlled boat, an early proof that a vehicle could be steered without anyone on board. These early pioneers were dreamers, imagining a world where machines could move independently.

Remember those sci-fi visions of automated highways? GM’s Firebird II concept car in 1956 tried to make it real. Then came the Stanford Cart, begun in the ’60s, which eventually learned to navigate with basic cameras. These were the early glimmers, the first sparks of what would become a technological inferno.

The term “AI” was coined in the mid-1950s, and by the ’80s, folks like Ernst Dickmanns had Mercedes vans driving themselves at 55 mph. The ’90s brought neural networks into play (“No Hands Across America” in ’95!), and the DARPA Grand Challenge in the 2000s supercharged the race. These were the awkward teenage years of AI, filled with promise and occasional stumbles.

Fast forward to now, and deep learning has truly transformed the landscape, powering giants like Waymo and Tesla’s “Autopilot.” It’s a testament to human ingenuity, a relentless pursuit of a dream that began with sketches on parchment and has culminated in algorithms that can navigate complex urban environments.

III. The Tech Talk: How Autonomous Cars “See” and “Think”

Let’s demystify the magic. How do these machines perceive the world, and how do they make decisions? It’s a fascinating blend of sensory input and computational power.

The Sensory Superheroes (Their “Eyes & Ears”):

- LiDAR: The laser light show that creates precise 3D maps. Think of it as a tape measure made of light: it times laser pulses bouncing off surfaces, which makes it perfect for detailed object detection, though it’s a bit shy in bad weather. LiDAR paints a point cloud picture of the environment, allowing the car to “see” with remarkable accuracy.

- Radar: Radio waves cutting through fog and darkness. This is the car’s echolocation, great for long distances and adverse conditions, but not as good at telling a car from a cardboard box. Radar is the reliable workhorse, providing constant awareness even when visibility is poor.

- Cameras: The vehicle’s high-res eyeballs. They read signs, spot pedestrians, and understand traffic lights, but like us, they struggle in glare or heavy rain. Cameras are the eyes that interpret the world in familiar terms, recognizing objects and patterns with increasing sophistication.

- Ultrasonic Sensors: Your parking assistant’s best friend. Short-range sound waves for tight squeezes and avoiding fender benders. Ultrasonic sensors are the close-range guardians, preventing those minor scrapes that can be so irritating.
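
For the curious, here is roughly how a single LiDAR return becomes a dot in that point cloud. This is a minimal Python sketch, not any vendor’s pipeline: the function names and the sample returns are made up for illustration. It relies on two ideas only: the pulse travels out and back (so halve the round trip), and a range plus two beam angles pins down a point in 3D.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second


def range_from_echo(round_trip_s: float) -> float:
    """Distance to the target. The pulse goes out and back, so halve it."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range plus beam angles) to x, y, z in the sensor frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # distance along the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# Hypothetical returns: (round-trip time in seconds, azimuth, elevation)
returns = [(200e-9, 0.0, 0.0), (400e-9, 90.0, 0.0), (100e-9, 45.0, -10.0)]
point_cloud = [to_point(range_from_echo(t), az, el) for t, az, el in returns]

for point in point_cloud:
    print(tuple(round(c, 2) for c in point))
```

A 200-nanosecond round trip works out to roughly 30 m. Radar and ultrasonic sensors use the same out-and-back timing idea, just with radio and sound waves instead of light.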

The AI Brainpower (The “Decision Maker”):

- Perception & Understanding: AI sifts through all that sensor data to build a real-time, 360-degree picture of the world. “Is that a child or a trash can?” This is where the AI begins to make sense of the raw data, identifying objects and predicting their behavior.

- Sensor Fusion (The Master Blender): This is where AI truly shines, combining all the different sensor inputs to create one super-reliable, redundant view. It’s like having multiple senses working in perfect harmony, covering each other’s blind spots.

- Decision Making & Path Planning: Using machine learning, the AI predicts what other drivers might do and plans the safest route, constantly updating. “Brake now? Swerve? Patience, grasshopper.” This is the heart of the autonomous system, where decisions are made based on a complex interplay of factors.

- Learning & Adapting: Like a perpetually enrolled student, the AI learns from every mile driven (real or simulated) to get smarter and safer over time. This is the key to continuous improvement, as the AI refines its understanding of the world and its ability to navigate it safely.
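
To make sensor fusion and decision making a little less abstract, here is a toy sketch in Python. It assumes each sensor reports a distance plus a noise estimate, blends them with inverse-variance weighting (one textbook technique among many; real systems typically use more elaborate filters), and then applies a simple time-to-collision rule. The `Reading` class, the noise figures, and the 2-second threshold are illustrative assumptions, not values from any real vehicle.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    distance_m: float  # estimated distance to the object ahead
    std_dev_m: float   # assumed noise level of this sensor right now


def fuse(readings):
    """Inverse-variance weighted average: precise sensors get more say."""
    weights = [1.0 / (r.std_dev_m ** 2) for r in readings]
    total = sum(weights)
    distance = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_std = (1.0 / total) ** 0.5  # never worse than the best single sensor
    return distance, fused_std


def decide(distance_m: float, closing_speed_m_s: float, brake_below_s: float = 2.0) -> str:
    """Brake when time-to-collision drops below the (assumed) threshold."""
    if closing_speed_m_s <= 0:
        return "cruise"  # the gap is steady or growing
    time_to_collision_s = distance_m / closing_speed_m_s
    return "brake" if time_to_collision_s < brake_below_s else "cruise"


# Hypothetical snapshot: three sensors disagree slightly about the car ahead.
readings = [
    Reading("lidar", 20.0, 0.1),
    Reading("radar", 20.6, 0.3),
    Reading("camera", 19.0, 1.0),
]
distance, uncertainty = fuse(readings)
print(f"fused distance: {distance:.2f} m (+/- {uncertainty:.2f} m)")
print("closing at 12 m/s:", decide(distance, 12.0))
print("closing at 5 m/s:", decide(distance, 5.0))
```

With these made-up numbers the fused distance lands at about 20.05 m, close to the LiDAR reading because it was given the smallest noise figure. If fog made the LiDAR noisier, raising its `std_dev_m` would automatically shift trust toward the radar, which is the “covering each other’s blind spots” idea in miniature.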

IV. The Bumpy Road Ahead: Opinions, Fears, and Fierce Debates

The technology may be impressive, but the road to widespread adoption is paved with skepticism, ethical dilemmas, and unanswered questions.

Public Perception: A Hesitant Head-Nod (Mostly Nods “No”):

- Fear Factor: Most people are still pretty spooked by fully driverless cars (roughly two-thirds of U.S. drivers surveyed in 2024 said they were afraid!). Safety is the #1 concern. This fear is understandable, as it challenges our fundamental sense of control and safety.

- Trust Issues: Many don’t believe AVs are safer than humans and deeply distrust cars without steering wheels. “What if it goes rogue?!” This lack of trust stems from a lack of transparency and a fear of the unknown.

- Glitches & Hacks: Concerns about software bugs, loss of control, and cyberattacks are very real. These are legitimate concerns, as any complex system is vulnerable to failure and malicious intent.

- A Glimmer of Hope: Some younger, tech-savvy folks are more open, and direct experience can actually boost confidence. This suggests that familiarity and exposure can help to overcome fear and build trust.

Expert Views: Cautious Optimism (But “Better Than Human” is the Bar):

- Level 5 is Distant: Experts agree true Level 5 autonomy (no human intervention, ever) isn’t around the corner. This acknowledgement of the challenges ahead is crucial for managing expectations and fostering realistic goals.

- Human Error’s Downfall: They emphasize that AVs could drastically reduce accidents, since human error is cited as a factor in the large majority of crashes (a figure of 90%+ is often quoted). This is the ultimate promise of autonomous vehicles, and it’s a powerful motivator for continued development.

- The High Bar: But to be truly accepted, AVs must be “much better than a human driver,” and humans already get the vast majority of their driving decisions right. That’s a tough standard, but it’s a necessary condition for widespread acceptance.

- Cybersecurity Nightmare: Experts also sound the alarm on hacking vulnerabilities – imagining a malicious actor taking control is a scary thought. This is a critical issue that must be addressed to ensure the safety and security of autonomous vehicles.

Controversies & Ethical Head-Scratchers:

- The Trolley Problem on Wheels: Who dies in an unavoidable accident? Passengers or pedestrians? Programmers are essentially playing God, and there’s no universal moral code. This is a deeply unsettling question that forces us to confront the limits of artificial intelligence and the complexities of human morality.

- Whose Fault Is It Anyway? When an AV crashes, who’s liable? The driver, the manufacturer, the programmer, or the AI itself? Legal frameworks are still catching up, and until they do, the uncertainty hangs over everyone involved in a crash.

- Privacy Pitfalls: These cars collect a lot of data, much of it personal: where you go, when, and how you drive. Who owns it? How is it used? Big Brother on four wheels?

- Job Apocalypse? Millions of driving jobs could be displaced, sparking major economic and social shifts. Softening that blow calls for planning well before the technology is widespread.

- Marketing Hype vs. Reality: Terms like “Full Self-Driving” can create dangerous over-reliance, leading to accidents when drivers check out prematurely. This highlights the importance of clear and accurate communication to prevent misuse and ensure safety.

V. The Road Ahead: What’s Next for Autonomous Tech?

The journey is far from over. Innovation continues at a breakneck pace, pushing the boundaries of what’s possible.

Smarter Brains (AI Evolution):

- Generative AI: Imagine AI creating endless realistic (but fake!) driving scenarios for training, preparing cars for rare “edge cases.” This could dramatically accelerate the training process and improve the robustness of autonomous systems.

- Edge AI: The car’s brain getting even faster, processing data right on board, not needing to call home to the cloud. This would reduce latency and improve the responsiveness of autonomous vehicles in critical situations.

- Explainable AI (XAI): No more black boxes! The aim is to know why the AI made a certain decision, which would boost trust, help accident investigations, and make it easier to assign accountability.

- Multimodal AI: Learning from everything – images, sound, text – for a truly holistic understanding. This would allow autonomous vehicles to learn from a wider range of data sources and improve their ability to navigate complex environments.
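
Why does on-board processing (Edge AI) matter so much? A quick back-of-the-envelope calculation helps: a moving car keeps covering ground while it waits for an answer. The Python sketch below uses two assumed latency figures purely for illustration; they are not measurements of any real system.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled before the car can even begin to react."""
    speed_m_s = speed_kmh / 3.6
    return speed_m_s * latency_ms / 1000.0


# Assumed latencies, chosen only to show the scale of the difference.
scenarios = [
    ("on-board processing (assumed 20 ms)", 20.0),
    ("cloud round trip (assumed 150 ms)", 150.0),
]

for label, latency_ms in scenarios:
    metres = distance_during_latency(100.0, latency_ms)
    print(f"{label}: {metres:.2f} m travelled at 100 km/h")
```

At 100 km/h, the assumed 150 ms round trip costs a little over 4 m of travel before any braking starts, versus roughly half a metre for the assumed on-board figure.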

Sharper Senses (Sensor Superpowers):

- Solid-State LiDAR: Smaller, cheaper, more durable lasers with few or no moving parts. This would make LiDAR more accessible and reliable, improving the performance of autonomous vehicles in a wider range of conditions, though bad weather remains a challenge for any laser-based sensor.

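- 4D Imaging Radar: Not just “where” and “how fast,” but “how high” too. It adds elevation to distance, direction, and speed, giving a denser picture that helps tell “what” an object is. Some experimental systems even use reflections to “see” around corners! This would provide more detailed and accurate information about the environment, improving the safety and reliability of autonomous vehicles.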

- Gigapixel Cameras & 5D Vision: Cameras so sharp they capture minuscule details, combining space, time, and motion for ultimate prediction. This would enable autonomous vehicles to “see” the world with unprecedented clarity and accuracy, improving their ability to anticipate and avoid potential hazards.

- Beyond the Horizon: Specialized infrared cameras for true night vision, miniaturized sensors for seamless integration. These advancements would further enhance the capabilities of autonomous vehicles and expand their operational range.
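
The “how fast” part of radar comes from the Doppler effect: a wave reflected off an approaching object comes back at a slightly higher frequency. Here is a minimal Python sketch of that relationship, assuming a 77 GHz carrier (a band commonly used by automotive radar) and a made-up frequency shift. The function name and sample value are illustrative only.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
CARRIER_HZ = 77e9  # assumed carrier frequency for this example


def radial_speed_m_s(doppler_shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Speed along the line of sight; positive means the object is approaching.

    The factor of 2 appears because the wave is shifted once on the way
    to the object and again on the way back.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


shift_hz = 10_000.0  # hypothetical measured shift
speed = radial_speed_m_s(shift_hz)
print(f"{shift_hz:.0f} Hz shift -> {speed:.1f} m/s ({speed * 3.6:.0f} km/h) closing speed")
```

A 10 kHz shift at this carrier frequency corresponds to a closing speed of about 19.5 m/s, or roughly 70 km/h. Note that this only measures motion toward or away from the sensor; sideways motion has to be inferred in other ways.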

Connected Cars, Smart Cities (The V2X Revolution):

- Talking Traffic: Cars communicating with each other (V2V) and with infrastructure (V2I) over wireless links such as 5G. Think traffic lights telling your car to prepare to stop, or cars warning each other of hazards around a blind bend. This would create a more connected and intelligent transportation system, improving traffic flow and safety.

- Traffic Flow & Safety: This interconnected web could prevent hundreds of thousands of crashes annually and dramatically reduce congestion. The potential benefits of V2X technology are enormous, and it could revolutionize the way we travel.
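
What might one of those car-to-car warnings look like? Real deployments use standardized message formats; the Python sketch below is not one of them. The `HazardMessage` fields, the JSON encoding, and the 300 m warning radius are all hypothetical, chosen only to show the basic idea: one car broadcasts a hazard’s location, and another decides whether it is close enough to care.

```python
import json
import math
from dataclasses import asdict, dataclass


@dataclass
class HazardMessage:  # hypothetical message layout, for illustration only
    kind: str
    lat: float
    lon: float


def encode(msg: HazardMessage) -> str:
    """Serialize the message so it could be broadcast."""
    return json.dumps(asdict(msg))


def decode(payload: str) -> HazardMessage:
    """Rebuild the message on the receiving car."""
    return HazardMessage(**json.loads(payload))


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points (haversine formula)."""
    earth_radius_m = 6_371_000.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * earth_radius_m * math.asin(math.sqrt(a))


def should_warn(msg: HazardMessage, my_lat: float, my_lon: float, radius_m: float = 300.0) -> bool:
    """Warn the driver or planner only if the hazard is within the assumed radius."""
    return distance_m(my_lat, my_lon, msg.lat, msg.lon) <= radius_m


# Car A spots ice and broadcasts; Car B receives the payload and checks relevance.
payload = encode(HazardMessage(kind="ice_on_road", lat=48.0010, lon=11.0))
received = decode(payload)
print("nearby car warned:", should_warn(received, 48.0, 11.0))
print("distant car warned:", should_warn(received, 48.01, 11.0))
```

A production system would also need timestamps, direction of travel, and message authentication so that a malicious sender can’t spam fake hazards, which ties back to the cybersecurity concerns raised earlier.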

The Grand Vision:

- Higher Autonomy Levels: More cars will reach Level 3 (conditional automation) and Level 4 (high automation) in coming years, moving closer to the fully driverless dream. This gradual progression towards full autonomy will allow us to adapt to the technology and address any unforeseen challenges.

- Ethical & Regulatory Roadmaps: Continued collaboration to establish global ethical guidelines and legal frameworks will be key to unlocking widespread adoption. This is essential for ensuring that autonomous vehicles are developed and deployed in a responsible and ethical manner.

- Environmental Wins: Self-driving electric cars could mean cleaner air and less wasted fuel. The combination of autonomous technology and electric vehicles has the potential to create a more sustainable transportation system.

VI. The Journey Continues: Towards a Safer, Smarter Drive

Autonomous cars, powered by cutting-edge AI and an arsenal of sensors, promise a future where roads are safer and commutes are smoother. It’s a vision that’s both exciting and daunting, filled with potential and fraught with challenges.

But it’s not just about the tech; it’s about earning public trust, tackling tricky ethical questions, and ensuring these intelligent machines truly benefit society. The human element is crucial, as we must shape the technology to align with our values and aspirations.
